Another Network Forensic Tool for the Toolbox - Dshell

This is a guest diary written by Mr. William Glodek – Chief, Network Security Branch, U.S. Army Research Laboratory

As a network analysis practitioner, I analyze multiple gigabytes of pcap data across multiple files on a daily basis. I have encountered many challenges where the standard tools (tcpdump, tcpflow, Wireshark/tshark) were either not flexible enough or couldn’t be prototyped quickly enough to do specialized analyses in a timely manner. Either the analysis couldn’t be done without recompiling the tool itself, or the plugin system was difficult to work with via command line tools.

Dshell, a Python-based network forensic analysis framework developed by the U.S. Army Research Laboratory, can help make that job a little easier [1]. The framework handles stream reassembly of both IPv4 and IPv6 network traffic and also includes geolocation and IP-to-ASN mapping data for each connection. It also enables development of network analysis plug-ins that parse and present data of interest from multiple levels of the network stack, so that results reach the user in a concise, useful manner. Since Dshell is written entirely in Python, the entire code base can be customized to particular problems quickly and easily, from tweaking an existing decoder to extract slightly different information from existing protocols to writing a new parser for a completely novel protocol. Here are two scenarios where Dshell has decreased the time required to identify and respond to network forensic challenges.

- Malware authors will frequently embed a domain name in a piece of malware for improved command and control or resiliency to security countermeasures such as IP blocking. When the attackers have completed their objective for the day, they minimize the network activity of the malware by updating the DNS record for the hostile domain to point to a non-Internet routable IP address (e.g., 127.0.0.1). When faced with hundreds or thousands of DNS requests/responses per hour, how can I find only the domains that resolve to a non-routable IP address?

Dshell> decode -d reservedips *.pcap

The “reservedips” module will find all of the DNS request/response pairs for domains that resolve to a non-routable IP address, and display each pair on a single line. Because each result is displayed on a single line, I can use other command line utilities like awk or grep to further filter the results. Dshell can also present the output in CSV format, which may be imported into many Security Information and Event Management (SIEM) tools or other analytic platforms.
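The underlying check, deciding whether a resolved address is routable on the Internet, can be sketched with Python's standard `ipaddress` module. This is not the code of the reservedips decoder; the function names and the sample (domain, address) pairs are assumptions made for illustration only.

```python
import ipaddress


def is_non_routable(address):
    """Return True if the address falls in a range that is not routed on the Internet."""
    ip = ipaddress.ip_address(address)
    return (
        ip.is_private
        or ip.is_loopback
        or ip.is_link_local
        or ip.is_reserved
        or ip.is_multicast
        or ip.is_unspecified
    )


def parked_domains(answers):
    """Keep only the (domain, address) pairs whose address is non-routable.

    `answers` is a hypothetical list of (domain, address) tuples, for example
    taken from DNS responses that were already decoded elsewhere.
    """
    hits = []
    for domain, address in answers:
        try:
            if is_non_routable(address):
                hits.append((domain, address))
        except ValueError:
            # Not a valid IPv4/IPv6 literal (e.g. a CNAME target); skip it.
            continue
    return hits
```

Feeding it pairs such as `("parked.example", "127.0.0.1")` and `("good.example", "8.8.8.8")` keeps only the first one.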

- A drive-by-download attack is successful and a malicious executable is downloaded [2]. I need to find the network flow of the download of the malicious executable and extract the executable from the network traffic.

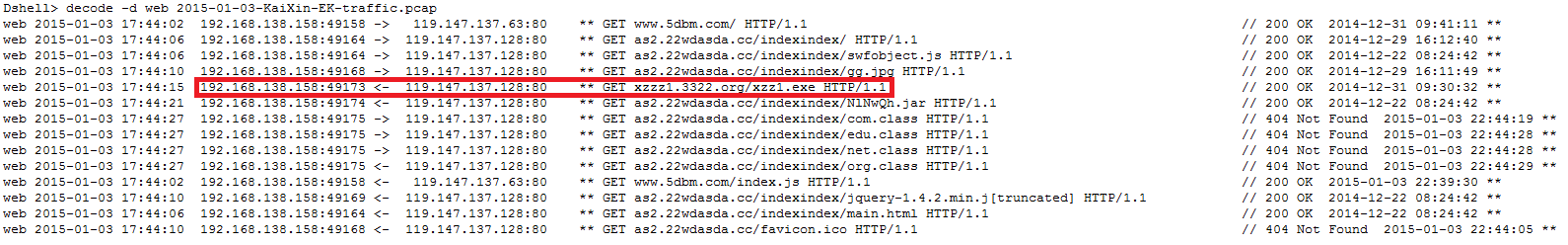

Using the “web” module, I can inspect all the web traffic contained in the sample file. In the example below, a request for ‘xzz1.exe’ with a successful server response is likely the malicious file.

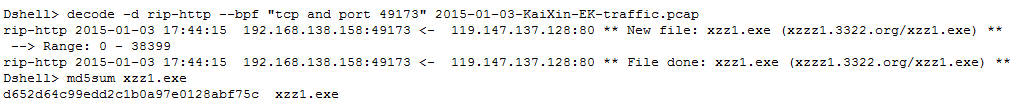

I can then extract the executable from the network traffic by using the “rip-http” module. The “rip-http” module will reassemble the IP/TCP/HTTP stream, identify the filename being requested, strip the HTTP headers, and write the data to disk with the appropriate filename.
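The last two steps described above (identify the requested filename, strip the HTTP headers) can be sketched in a few lines of Python. This is a simplified illustration and not the rip-http source: it assumes the request and response have already been reassembled into byte strings, it does not handle chunked transfer encoding or compression, and both function names are hypothetical.

```python
import posixpath
from urllib.parse import unquote, urlsplit


def filename_from_request(request, default="index.html"):
    """Derive a local filename from the request line of an HTTP request."""
    request_line = request.split(b"\r\n", 1)[0].decode("latin-1")
    parts = request_line.split(" ")
    if len(parts) < 2:
        return default
    # Keep only the last path component so the file cannot escape the output directory.
    name = posixpath.basename(unquote(urlsplit(parts[1]).path))
    return default if name in ("", ".", "..") else name


def strip_http_headers(response):
    """Return the body of a reassembled HTTP response without its headers."""
    head, separator, body = response.partition(b"\r\n\r\n")
    if not separator:
        raise ValueError("no header/body separator found")
    # Honor Content-Length when present so trailing bytes are not included.
    for line in head.split(b"\r\n")[1:]:
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            return body[: int(value.strip().decode("ascii"))]
    return body
```

The returned body could then be written to disk with `open(filename, "wb")` under the name returned by `filename_from_request`.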

There are additional modules within the Dshell framework to solve other challenges faced with network forensics. The ability to rapidly develop and share analytical modules is a core strength of Dshell. If you are interested in using or contributing to Dshell, please visit the project at https://github.com/USArmyResearchLab/Dshell.

[1] Dshell – https://github.com/USArmyResearchLab/Dshell

[2] http://malware-traffic-analysis.net/2015/01/03/index.html

What is using this library?

Last year with OpenSSL, and this year with the GHOST glibc vulnerability, the question came up about what piece of software is using what specific library. This is a particularly challenging inventory problem. Most software does not document all of its dependencies well. Libraries can be statically compiled into a binary, or they can be loaded dynamically. In addition, updating a library on disk may not always be sufficient if a particular piece of software is still using a copy of the library that is already loaded in memory.

To solve the first problem, there is "ldd". ldd will tell you what libraries will be loaded by a particular piece of software. For example:

$ ldd /bin/bash

linux-vdso.so.1 => (0x00007fff9677e000)

libtinfo.so.5 => /lib64/libtinfo.so.5 (0x00007fa397b43000)

libdl.so.2 => /lib64/libdl.so.2 (0x00007fa39793f000)

libc.so.6 => /lib64/libc.so.6 (0x00007fa3975aa000)

/lib64/ld-linux-x86-64.so.2 (0x00007fa397d72000)

The first line (linux-vdso) doesn't point to an actual library, but to the "Virtual Dynamic Shared Object" which represents kernel routines. Whenever you see an "arrow" (=>), it shows the path on disk that the library name resolves to (often itself a symlink to a specific library version). Another option that works quite well for shared libraries is "readelf". For example, "readelf -d /bin/bash" will list the dynamic section of the binary, including the NEEDED entries that name the shared libraries it requires.
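When checking many binaries, ldd output in the format shown above is easy to parse. The following Python sketch turns that text into a mapping from library name to resolved path; the function names are assumptions for illustration, and the parsing only covers the line shapes that appear in the example (plus "not found").

```python
def parse_ldd(output):
    """Map each library name in ldd output to its resolved path (or None)."""
    libs = {}
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue
        # Drop the trailing load address, e.g. "(0x00007fa3975aa000)".
        if line.endswith(")") and "(" in line:
            line = line[: line.rindex("(")].strip()
        if "=>" in line:
            name, _, path = line.partition("=>")
            path = path.strip()
            # An empty target (linux-vdso) or "not found" means no file on disk.
            libs[name.strip()] = path if path.startswith("/") else None
        else:
            # Lines without an arrow: an absolute path (the dynamic loader)
            # resolves to itself; anything else has no file on disk.
            libs[line] = line if line.startswith("/") else None
    return libs


def uses_library(libs, prefix):
    """Return True if any library name in the mapping starts with the given prefix."""
    return any(name.rsplit("/", 1)[-1].startswith(prefix) for name in libs)
```

The text to parse could come from `subprocess.run(["ldd", "/bin/bash"], capture_output=True, text=True).stdout`, after which `uses_library(parse_ldd(text), "libc.so")` answers whether the binary links against glibc dynamically.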

To list libraries currently loaded, and programs that are using them, you can use lsof.

One trick with lsof is that it may abbreviate command names to make the output look better. To fix this, use the "+c 0" option (or use a number that is long enough to help you find the right command). You can grep the output for the library you are interested in. For example:

# lsof +c 0 | grep libc-

init 1 root mem REG 253,0 1726296 131285 /lib64/libc-2.5.so

udevd 836 root mem REG 253,0 131078 /lib64/libc-2.5.so (path inode=131285)

anvil 987 postfix mem REG 253,0 1726296 131285 /lib64/libc-2.5.so

The first column names the process that has the library loaded. The number in front of the library path (131285) is the inode of the library file. As you may note above, the inode is different for one of these entries (udevd, with inode 131078), indicating that the library on disk changed after that process loaded it. Processes like this one are the ones that need restarting.
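The inode comparison can be automated. The Python sketch below parses lsof lines like the ones above and reports the processes whose mapped library inode differs from the inode currently on disk. It is an illustration under stated assumptions: the function names are hypothetical, the inode is taken to be the column immediately before the path, and command names containing spaces are not handled.

```python
from collections import defaultdict


def mapped_inodes(lsof_output, library_fragment):
    """Group (command, pid) pairs by the inode of the mapped library file."""
    by_inode = defaultdict(list)
    for line in lsof_output.splitlines():
        tokens = line.split()
        # The NAME column is the first token that looks like an absolute path.
        path_idx = next((i for i, t in enumerate(tokens) if t.startswith("/")), None)
        if path_idx is None or path_idx < 3:
            continue
        if library_fragment not in tokens[path_idx]:
            continue
        inode, pid = tokens[path_idx - 1], tokens[1]
        if inode.isdigit() and pid.isdigit():
            by_inode[int(inode)].append((tokens[0], int(pid)))
    return dict(by_inode)


def needs_restart(lsof_output, library_fragment, current_inode):
    """Return the processes that still map an inode other than the current one."""
    stale = []
    for inode, processes in mapped_inodes(lsof_output, library_fragment).items():
        if inode != current_inode:
            stale.extend(processes)
    return sorted(stale)
```

On a live system, `current_inode` could be obtained with `os.stat("/lib64/libc-2.5.so").st_ino`; for the sample output above, passing 131285 flags only udevd.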

It is always best to reboot the system so that you do not have to worry about remnants of bad code staying in memory.

In addition, if your system uses RPMs, you can look up dependencies in the RPM package metadata, but this information is not always complete.
