Querying Spamhaus for IP reputation

Way back in 2018 I posted a diary describing how I have been using the Neutrino API to do IP reputation checks. In the subsequent 2+ years that Python script has evolved somewhat, which I hope to go over at some point in the future, but for now I would like to show you the most recent capability I added to that script.

As most of you know, The Spamhaus Project has been at the forefront of the fight against spam for over 20 years. But did you know they provide a DNS-query-based API that can be used, for low-volume non-commercial use, to query all of the Spamhaus blocklists at once? The interface is zen.spamhaus.org. Because it is DNS-based, you can perform the query using nslookup or dig, and the returned IP address is the return code.

For example, say we want to test whether 196.16.11.222 is on a Spamhaus list. Because the interface takes a DNS query, we first need to reverse the octets of the IP address and then append .zen.spamhaus.org, i.e. the DNS query would look like 222.11.16.196.zen.spamhaus.org
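The reversal step can be sketched in a few lines of Python. This is only an illustration: the function name `spamhaus_query_name` is made up for this example, and it handles IPv4 addresses only.

```python
import ipaddress


def spamhaus_query_name(ip: str, zone: str = "zen.spamhaus.org") -> str:
    """Build the DNS name to query for an IPv4 address (hypothetical helper)."""
    # ipaddress validates the input and raises AddressValueError on garbage.
    addr = ipaddress.IPv4Address(ip)
    # Reverse the four octets, then append the blocklist zone.
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{zone}"


print(spamhaus_query_name("196.16.11.222"))
# prints: 222.11.16.196.zen.spamhaus.org
```

Validating the address first avoids sending a malformed name to the resolver.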

$ nslookup 222.11.16.196.zen.spamhaus.org

Non-authoritative answer:

Name: 222.11.16.196.zen.spamhaus.org

Address: 127.0.0.2

Name: 222.11.16.196.zen.spamhaus.org

Address: 127.0.0.9

or with dig...

$ dig 222.11.16.196.zen.spamhaus.org

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7_9.4 <<>> 222.11.16.196.zen.spamhaus.org

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 64622

;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;222.11.16.196.zen.spamhaus.org. IN A

;; ANSWER SECTION:

222.11.16.196.zen.spamhaus.org. 41 IN A 127.0.0.2

222.11.16.196.zen.spamhaus.org. 41 IN A 127.0.0.9

As you can see, in both cases the DNS response returned two results: 127.0.0.2 and 127.0.0.9. In practice, just the fact that you receive return codes tells you that this IP is on Spamhaus's lists and has recently been involved in naughty behavior. However, to know which Spamhaus lists in particular the return codes apply to, consult this table:

| Return Code | Zone | Description |
|---|---|---|
| 127.0.0.2 | SBL | Spamhaus SBL Data |
| 127.0.0.3 | SBL | Spamhaus SBL CSS Data |
| 127.0.0.4 | XBL | CBL Data |
| 127.0.0.9 | SBL | Spamhaus DROP/EDROP Data |
| 127.0.0.10 | PBL | ISP Maintained |
| 127.0.0.11 | PBL | Spamhaus Maintained |

If you query an IP that is not on any Spamhaus list, the result will be Non-Existent Domain (NXDOMAIN):

$ nslookup 222.11.16.1.zen.spamhaus.org

** server can't find 222.11.16.1.zen.spamhaus.org: NXDOMAIN

I have created a Python script which performs this lookup and have integrated this code into my IP reputation script.

$ python3 queryspamhaus.py 196.16.11.222

196.16.11.222 127.0.0.2 ['SBL']

$ python3 queryspamhaus.py 1.16.11.222

1.16.11.222 0 ['Not Found']

The script does have a bug. The socket.gethostbyname() function returns only one result, so the script reports an incomplete result for IPs which are on multiple Spamhaus lists. Since usually all I am looking for is whether the IP is on any list, I have never bothered to research how to fix this bug.

For those of you who are interested, the script is below. As usual, I only build these scripts for my own use/research, so a real Python programmer could very likely code something better.
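One possible way around the single-result limitation is the standard library's socket.gethostbyname_ex(), which returns every address in the answer. The sketch below is not the author's script: the name `check_spamhaus_all`, the `ZONES` labels and the injectable `resolver` parameter are assumptions made for illustration (the parameter exists only so the function can be exercised without a network).

```python
import socket

# Return codes from the table earlier in this diary; labels are illustrative.
ZONES = {
    "127.0.0.2": "SBL",
    "127.0.0.3": "SBL CSS",
    "127.0.0.4": "XBL",
    "127.0.0.9": "SBL DROP/EDROP",
    "127.0.0.10": "PBL (ISP maintained)",
    "127.0.0.11": "PBL (Spamhaus maintained)",
}


def check_spamhaus_all(ip, resolver=socket.gethostbyname_ex):
    """Return a list of (return code, list name) pairs; empty if not listed."""
    hostname = ".".join(reversed(ip.split("."))) + ".zen.spamhaus.org"
    try:
        # gethostbyname_ex returns (hostname, aliaslist, ipaddrlist).
        _, _, codes = resolver(hostname)
    except (socket.herror, socket.gaierror):
        # Lookup failed; for a blocklist query this normally means NXDOMAIN.
        return []
    # Keep the order of the answer; unknown codes are reported as "Unknown".
    return [(code, ZONES.get(code, "Unknown")) for code in codes]
```

Calling check_spamhaus_all("196.16.11.222") at the time of the lookups shown earlier would presumably have reported both 127.0.0.2 and 127.0.0.9. Note that this sketch treats any resolver error as "not listed", so a DNS outage is indistinguishable from a clean IP.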

#!/usr/bin/env python3
#
# queryspamhaus.py

import argparse
import socket


def check_spamhaus(ip):
    # Reverse the octets and append the Spamhaus zone to build the query name
    hostname = ".".join(ip.split(".")[::-1]) + ".zen.spamhaus.org"
    try:
        # Returns only the first address, even if the IP is on several lists
        result = socket.gethostbyname(hostname)
    except socket.error:
        # NXDOMAIN (or any other lookup failure): treat as not listed
        result = 0

    rdict = {"127.0.0.2": ["SBL"],
             "127.0.0.3": ["SBL CSS"],
             "127.0.0.4": ["XBL"],
             "127.0.0.6": ["XBL"],
             "127.0.0.7": ["XBL"],
             "127.0.0.9": ["SBL"],
             "127.0.0.10": ["PBL"],
             "127.0.0.11": ["PBL"],
             0: ["Not Found"]
             }
    # Fall back to "Unknown" for return codes missing from the table
    return result, rdict.get(result, ["Unknown"])


def main():
    parser = argparse.ArgumentParser()
    parser.add_argument('IP', help="IP address")
    args = parser.parse_args()
    ip = args.IP

    result, tresult = check_spamhaus(ip)
    print('{} {} {}'.format(ip, result, tresult))


if __name__ == "__main__":
    main()

-- Rick Wanner MSISE - rwanner at isc dot sans dot edu - Twitter:namedeplume (Protected)

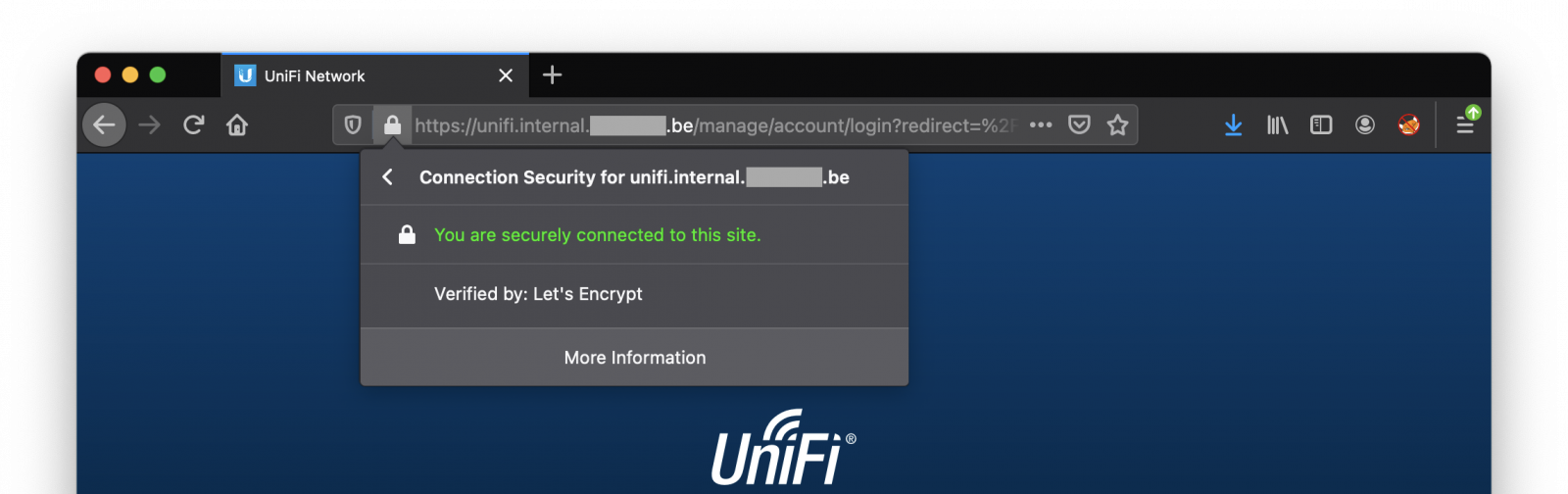

HTTPS Support for All Internal Services

SSL/TLS has been in the spotlight for a while, with deprecated protocols[1] and free certificates for everybody[2]. The landscape is changing to push more and more people to switch to encrypted communications, and this is good! As Johannes explained yesterday[3], Chrome 90 will now default to "https://" in the navigation bar. Yesterday's diary covered the deployment of your own internal CA to generate certificates and switch everything to secure communications. This is a good point. In particular, by deploying your own root CA, you add an extra string to your security bow: SSL interception and inspection.

But sometimes, you could face other issues:

- If you have guests on your network, they won't have the root CA installed and will receive annoying messages.

- If you have very old devices or "closed" devices (like all kinds of IoT gadgets), it could be difficult to switch them to HTTPS.

On my network, I'm still using Let's Encrypt, but to generate certificates for internal hostnames. To avoid reconfiguring "old devices", I'm concentrating all the traffic behind a Traefik[4] reverse proxy. Here is my setup:

[Figure: diagram of the setup]

My IoT devices and facilities (printers, cameras, lights) are connected to a dedicated VLAN with restricted capabilities. As you can see, the URLs to access them may use HTTP or HTTPS, on standard or exotic ports. A Traefik reverse proxy is installed on the IoT VLAN and is accessible from clients only through TCP/443. Access to the "services" is provided through easy-to-remember URLs (https://service-a.internal.mydomain.be, etc).

From an HTTP point of view, Traefik is deployed in a standard way (in a Docker container in my case). The following configuration is added to let it handle the certificates:

# Enable ACME
certificatesResolvers:
  le:
    acme:
      email: xavier@<redacted>.be
      storage: /etc/traefik/acme.json
      dnsChallenge:
        provider: ovh
        delayBeforeCheck: 10
        resolvers:
          - "8.8.8.8:53"
          - "8.8.4.4:53"

There is one major requirement for this setup: you need to use a valid domain name (read: a publicly registered domain) to generate internal URLs (in my case, "mydomain.be"), and the domain must be hosted at a provider that offers an API to manage the DNS zone (in my case, OVH). This is required by the DNS authentication mechanism that we will use. Every new certificate generation will require a specific DNS record to be created through the API:

_acme-challenge.service-a.internal.mydomain.be

The subdomain is yours to choose ("internal", "dmz", ...), be imaginative!
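Traefik creates and removes this record for you through the provider's API, so nothing below is needed in practice. Purely to illustrate what the DNS challenge involves, here is a minimal sketch based on the ACME DNS-01 rules (RFC 8555): the record name is the hostname prefixed with "_acme-challenge.", and the TXT value is the unpadded base64url encoding of the SHA-256 digest of the key authorization. The function names are invented for this example, and the key authorization string in the usage note is a placeholder, not real data.

```python
import base64
import hashlib


def dns01_record_name(fqdn: str) -> str:
    """Name of the TXT record checked during a DNS-01 challenge."""
    return "_acme-challenge." + fqdn.rstrip(".")


def dns01_txt_value(key_authorization: str) -> str:
    """TXT value: unpadded base64url of SHA-256(key authorization)."""
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


print(dns01_record_name("service-a.internal.mydomain.be"))
# prints: _acme-challenge.service-a.internal.mydomain.be
```

For instance, dns01_txt_value("placeholder-token.placeholder-thumbprint") yields a 43-character string, which is what the ACME server would look for in the TXT record.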

For all services running in Docker containers, Traefik is able to detect them and generate certificates on the fly. For other services like IoT devices, you just create a new config in Traefik, per service:

http:
  services:
    service_cam1:
      loadBalancer:
        servers:
          - url: "https://172.16.0.155:8443/"
  routers:
    router_cam1:
      rule: Host("cam1.internal.mydomain.be")
      entryPoints: [ "websecure" ]
      service: service_cam1
      tls:
        certResolver: le

You can instruct Traefik to monitor new configuration files and automatically load them:

# Enable automatic reload of the config
providers:
  file:
    directory: /etc/traefik/hosts/
    watch: true
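With the watched directory in place, adding a device amounts to dropping one more file into it. As a rough sketch, the per-service config shown earlier could be generated from a template. The helper names, the one-file-per-device layout and the ".yml" file name are assumptions made for this example, not part of my setup.

```python
from pathlib import Path

# Mirrors the per-service config above, with the device name,
# backend URL and domain left as template fields.
TEMPLATE = """\
http:
  services:
    service_{name}:
      loadBalancer:
        servers:
          - url: "{backend}"
  routers:
    router_{name}:
      rule: Host("{name}.{domain}")
      entryPoints: [ "websecure" ]
      service: service_{name}
      tls:
        certResolver: le
"""


def render_device_config(name, backend, domain="internal.mydomain.be"):
    """Return the config text for one device (hypothetical helper)."""
    return TEMPLATE.format(name=name, backend=backend, domain=domain)


def write_device_config(directory, name, backend, domain="internal.mydomain.be"):
    """Write <name>.yml into the directory watched by Traefik."""
    path = Path(directory) / f"{name}.yml"
    path.write_text(render_device_config(name, backend, domain))
    return path
```

For example, write_device_config("/etc/traefik/hosts/", "cam1", "https://172.16.0.155:8443/") would recreate the camera config shown above.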

Now you are ready to deploy all your internal HTTPS URLs and map them to your gadgets!

Of course, you have to maintain an internal DNS zone with records pointing to your Traefik instance.

Warning: Some services accessed through this kind of setup may require configuration tuning. For example, search for parameters like "base URL" and change them to reflect the URL that you're using with Traefik. More details about ACME support are available here[5] (with a list of all supported DNS providers).

[1] https://isc.sans.edu/forums/diary/Old+TLS+versions+gone+but+not+forgotten+well+not+really+gone+either/27260

[2] https://letsencrypt.org

[3] https://isc.sans.edu/forums/diary/Why+and+How+You+Should+be+Using+an+Internal+Certificate+Authority/27314/

[4] https://traefik.io

[5] https://doc.traefik.io/traefik/https/acme/

Xavier Mertens (@xme)

Senior ISC Handler - Freelance Cyber Security Consultant
