DShield Honeypot Maintenance and Data Retention

A few changes to a honeypot can retain more local data for analysis, make that analysis easier, and collect new data. This diary outlines some tasks I perform to get more out of my honeypots:

- add additional logging options to dshield.ini

- make copies of cowrie JSON logs

- make copies of web honeypot JSON logs

- process cowrie logs with cowrieprocessor [1]

- upload new files to VirusTotal

- capture PCAP data using tcpdump

- backup honeypot data

DShield Data Readily Available

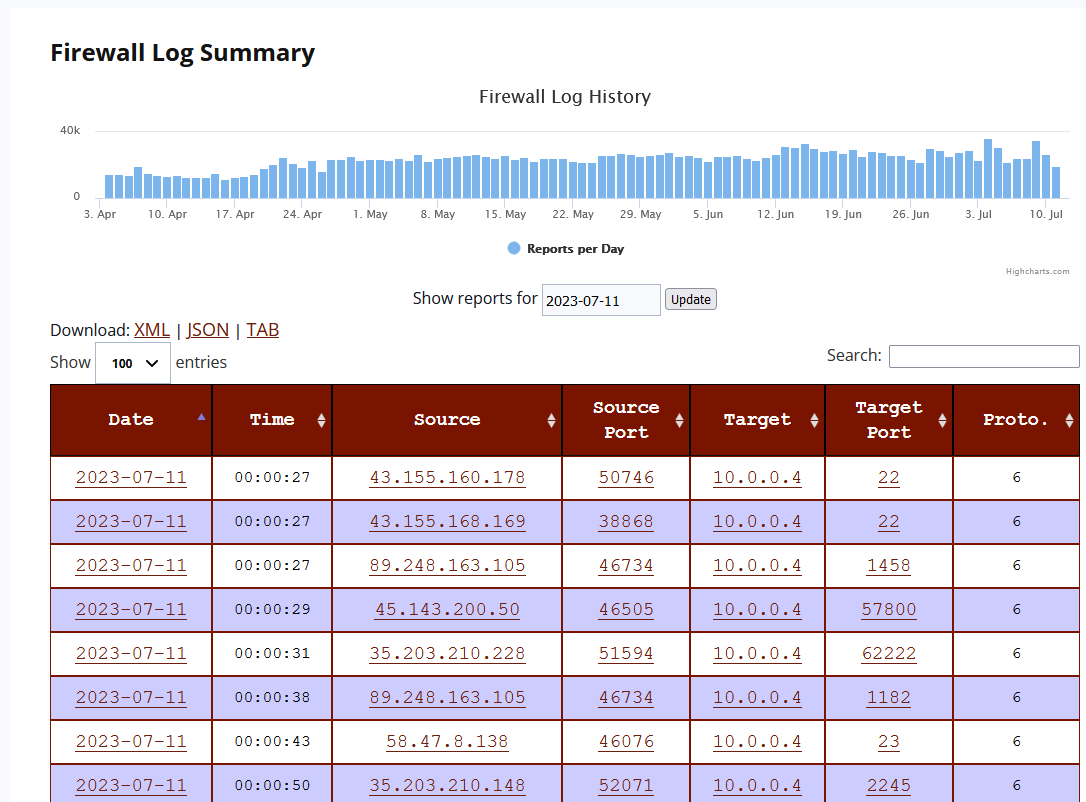

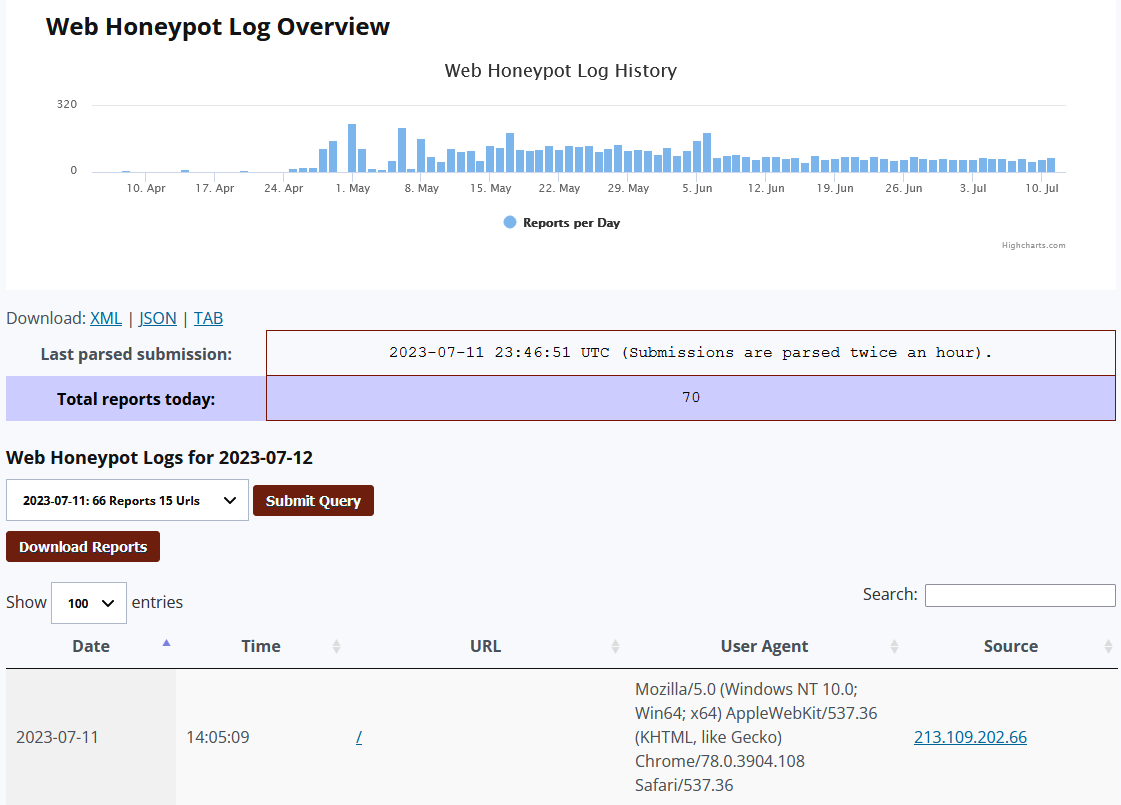

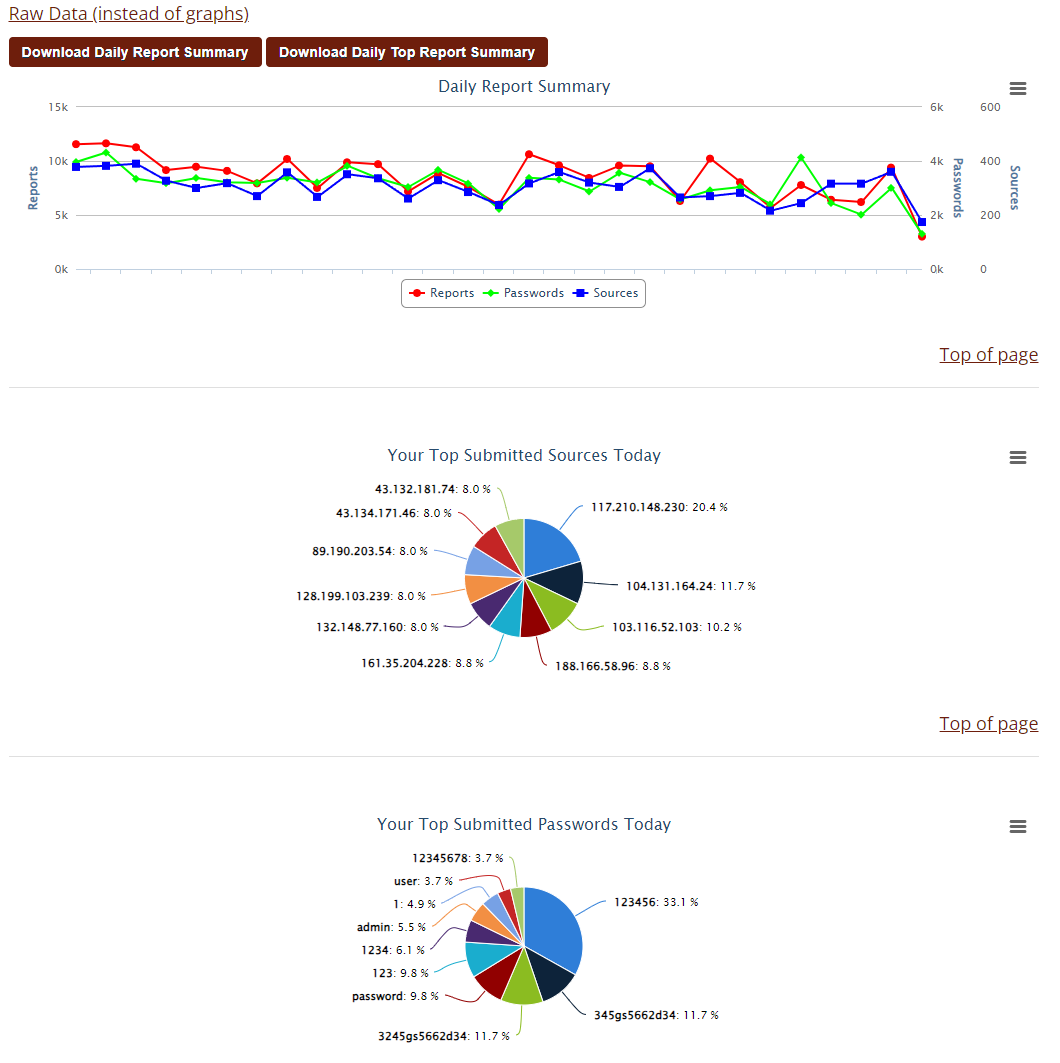

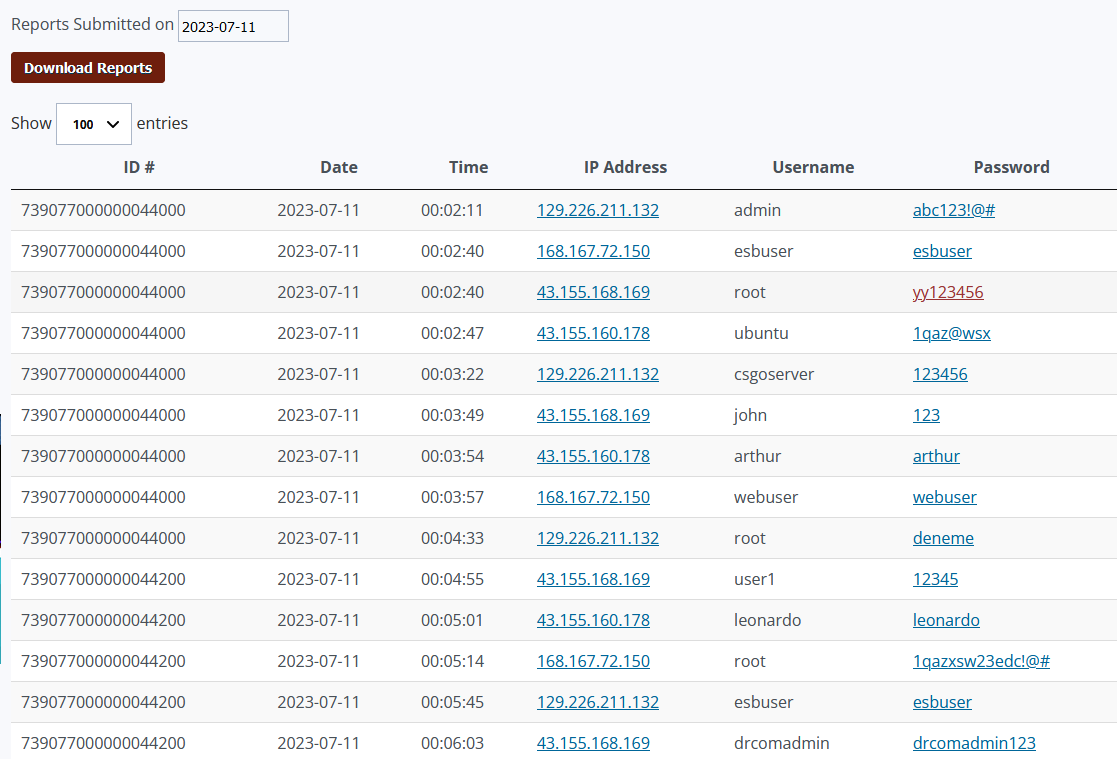

The nice thing is that after setting up your DShield honeypot [2], data is available very quickly in your user portal. There is easy access to:

- firewall logs [3]

- web logs [4]

- ssh/telnet logs [5] [6]

Much of this data can also be downloaded in raw format, but it may not contain as much detail as the logs available locally on the honeypot. Having the local honeypot data can also help when reviewing activity over longer periods of time.

Figure 1: Example of honeypot firewall logs in user portal

Figure 2: Example of honeypot web logs in user portal

Figure 3: Example of honeypot ssh/telnet graphs in user portal

Figure 4: Example of honeypot raw ssh/telnet data in user portal

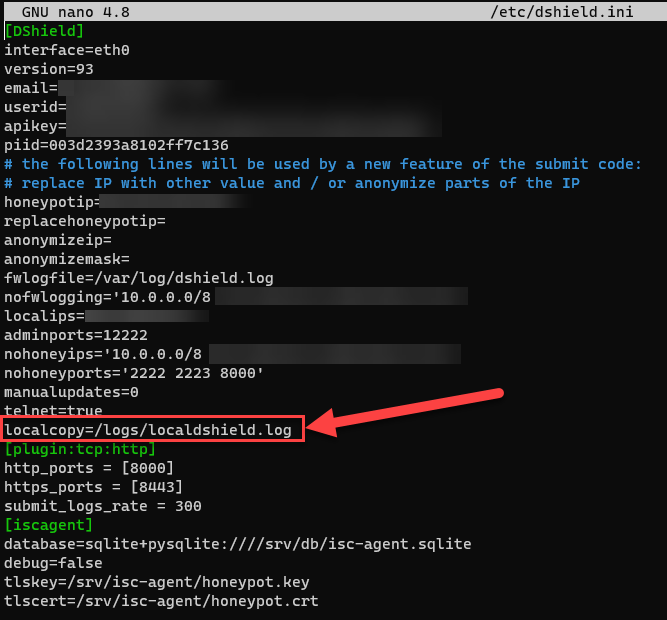

Add additional logging options to dshield.ini

Default logging location: /var/log/dshield.log (current day)

One small change in /etc/dshield.ini will generate a separate log file that is kept indefinitely, until the file is moved or cleared out. By default, firewall log data goes to /var/log/dshield.log, which only holds data from the current day. Simply add the line:

localcopy=<path to new log file>

Figure 5: Example modification of /etc/dshield.ini file to specify an additional logging location

In my example, I chose to save the copy to /logs/localdshield.log.

Note: Previous versions of the DShield honeypot also stored web honeypot data in this file. That data has since moved to a separate JSON file.

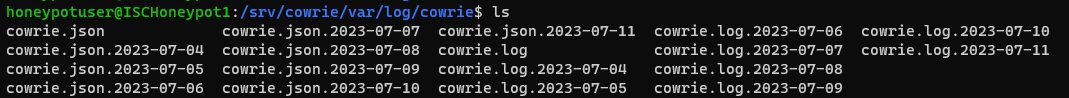

Make copies of cowrie JSON logs

Default logging location: /srv/cowrie/var/log/cowrie/ (last 7 days, 1 file per day)

The cowrie files can be very helpful since they store not only the usernames and passwords attempted, but also the commands run by bots or users that receive console access via cowrie. Information about file uploads and downloads is also recorded in this data. The raw JSON files hold much more information than the ISC user portal, for anyone interested in reviewing it.

The cowrie logs are rotated, and only the last 7 days are kept. As with the firewall data, I archive the cowrie JSON files in my /logs directory by adding an entry to my crontab [7].

```
# open an editor to modify the crontab for a regularly scheduled task
# (-e option is for "edit")
crontab -e

# m h dom mon dow command
# copy all cowrie JSON logs (cowrie.json and its dated copies) to /logs/ daily at midnight
0 0 * * * cp /srv/cowrie/var/log/cowrie/cowrie.json* /logs/
```

One benefit of the cowrie log files is that their names are based on the date of activity. This means there's no need for creative renaming or rotation to avoid accidentally overwriting data.

Figure 6: Cowrie log files stored on the DShield honeypot
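Because the archived files keep every event, a quick tally per file can show how activity changes from day to day. The function below is a minimal sketch, assuming cowrie's JSON log layout of one event per line with an `eventid` field. The name `summarize_cowrie_log` is hypothetical, and the line-based `grep` is a shortcut rather than a real JSON parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count a few cowrie event types in one JSON log file.
# Assumes one JSON event per line with an "eventid" field (cowrie's JSON log
# layout). This is a line-based grep shortcut, not a real JSON parser.
summarize_cowrie_log() {
  local file="$1" event count
  for event in cowrie.login.failed cowrie.login.success \
               cowrie.command.input cowrie.session.file_download; do
    # grep -c prints the number of matching lines; it exits 1 when the count
    # is 0, so "|| true" keeps that from being treated as an error
    count=$(grep -c "\"eventid\": *\"${event}\"" "$file" || true)
    printf '%-32s %s\n' "$event" "$count"
  done
}
```

For example, `for f in /logs/cowrie.json*; do echo "== $f"; summarize_cowrie_log "$f"; done` would print one tally per archived day.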

Make copies of web honeypot JSON logs

Default logging location: /srv/db/webhoneypot.json (currently no retention limit)

There is another file in this location, isc-agent.sqlite, although the data within that SQLite database is cleared out very quickly once submitted. Similar to the cowrie logs, setting up a task in crontab can help retain some of this data.

```
# open an editor to modify the crontab for a regularly scheduled task
# (-e option is for "edit")
crontab -e

# m h dom mon dow command
# copy all files within /srv/db/ (webhoneypot.json and isc-agent.sqlite) to /logs/ daily at midnight
0 0 * * * cp /srv/db/*.* /logs/
```

Some improvements could be made here. Since all the data is in one large file, it could easily be overwritten if logging changes. Moving the file and adding the current date to its name would be easy enough: a new file would be created, and each archived file would hold the last 24 hours of data. There may be an issue if the file is being actively written to at that moment, but that would likely be rare. Either way, improvements can be made.
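The dated-file idea could be sketched roughly as follows. `rotate_with_date` is a hypothetical helper, not part of the DShield honeypot, and it swaps the move for a copy-then-truncate (the approach logrotate calls copytruncate) so the web honeypot keeps writing to the same file. It assumes GNU `date` and, untested, that the honeypot appends to the file; a line written between the copy and the truncate would be lost, which is the rare race described above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: archive a growing log under a dated name, then empty it.
# Copy-then-truncate keeps the writer's open file; lines written between the
# copy and the truncate are lost. Assumes (untested) the writer appends.
# Usage: rotate_with_date SRC DESTDIR [YYYY-MM-DD]
rotate_with_date() {
  local src="$1" destdir="$2"
  # default to yesterday (GNU date), since a midnight run archives the prior day
  local stamp="${3:-$(date -d yesterday +%Y-%m-%d)}"
  local dest
  dest="${destdir}/$(basename "$src").${stamp}"

  [ -s "$src" ] || return 0               # nothing to archive
  if [ -e "$dest" ]; then                 # never overwrite an earlier archive
    echo "refusing to overwrite $dest" >&2
    return 1
  fi
  cp "$src" "$dest" && : > "$src"         # copy, then truncate in place
}
```

Run shortly after midnight (likely from root's crontab, since /srv/db may not be writable by other users), a call such as `rotate_with_date /srv/db/webhoneypot.json /logs` would leave one dated file per day, similar to the cowrie logs.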

Process cowrie logs with cowrieprocessor

Rather than manually reviewing the cowrie JSON files or using /srv/cowrie/bin/playlog to view tty files, this Python script helps me do the following:

- summarize attacks

- enrich attacks with DShield API, VirusTotal, URLhaus, SPUR.us

- outline commands attempted

- upload summary data to Dropbox

Here is an example of the summary generated for one session:

```
Session                   3d50bd262304
Session Duration          12.38 seconds
Protocol                  ssh
Username                  shirley
Password                  shirley123
Timestamp                 2023-07-11T11:36:53.459135Z
Source IP Address         181.204.184.242
URLhaus IP Tags
ASNAME                    EPM Telecomunicaciones S.A. E.S.P.
ASCOUNTRY                 CO
Total Commands Run        22
SPUR ASN                  13489
SPUR ASN Organization     EPM Telecomunicaciones S.A. E.S.P.
SPUR Organization         Colombia Móvil
SPUR Infrastructure       MOBILE
SPUR Client Types         ['DESKTOP', 'MOBILE']
SPUR Client Count         1
SPUR Location             Santiago de Cali, Departamento del Valle del Cauca, CO

------------------- DOWNLOAD DATA -------------------

Download URL
Download SHA-256 Hash     ff6f81930943c96a37d7741cd547ad90295a9bd63b6194b2a834a1d32bc8f85d
Destination File          /var/tmp/.var03522123
VT Description            JavaScript
VT Threat Classification
VT First Submssion        2009-03-03 07:33:11
VT Malicious Hits         0

Download URL
Download SHA-256 Hash     56fb4d88c89b14502279702680b0f46620d31e28e62467854fcedecbfea760be
Destination File          /tmp/up.txt
VT Description            Text
VT Threat Classification
VT First Submssion        2023-07-11 11:40:02
VT Malicious Hits         0

------------------- UPLOAD DATA -------------------

Upload URL
Upload SHA-256 Hash       e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855
Destination File          dota.tar.gz
VT Description            unknown
VT Threat Classification
VT First Submssion        2006-09-18 07:26:15
VT Malicious Hits         0

////////////////// COMMANDS ATTEMPTED //////////////////

# cat /proc/cpuinfo | grep name | wc -l
# echo -e "shirley123\nh839XJsluyqc\nh839XJsluyqc"|passwd|bash
# Enter new UNIX password:
# echo "shirley123\nh839XJsluyqc\nh839XJsluyqc\n"|passwd
# echo "321" > /var/tmp/.var03522123
# rm -rf /var/tmp/.var03522123
# cat /var/tmp/.var03522123 | head -n 1
# cat /proc/cpuinfo | grep name | head -n 1 | awk '{print $4,$5,$6,$7,$8,$9;}'
# free -m | grep Mem | awk '{print $2 ,$3, $4, $5, $6, $7}'
# ls -lh $(which ls)
# which ls
# crontab -l
# w
# uname -m
# cat /proc/cpuinfo | grep model | grep name | wc -l
# top
# uname
# uname -a
# lscpu | grep Model
# echo "shirley shirley123" > /tmp/up.txt
# rm -rf /var/tmp/dota*
```

As with several of the other tasks, I automated this with crontab, running the process a few times a day. The available options are listed on GitHub [1].

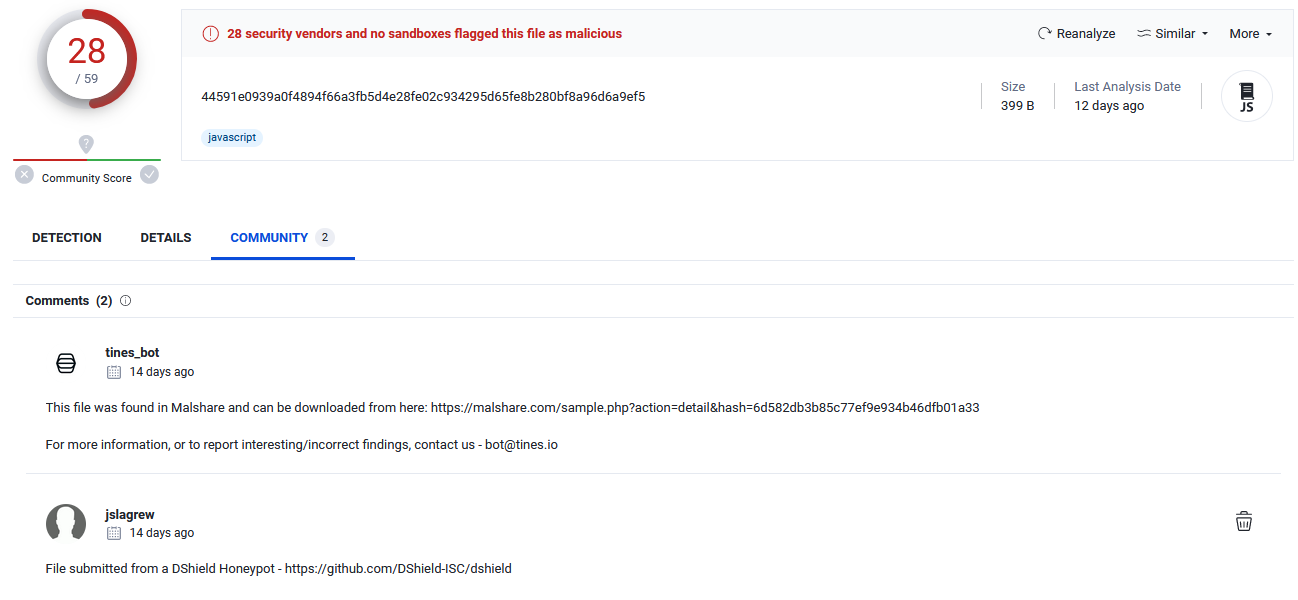

Upload new files to VirusTotal

I set up regular uploads of file data to VirusTotal to help with data enrichment for cowrieprocessor. If a file hasn't been uploaded, there is little or no data available about it. It also means that every now and again, one of my honeypots is the first to upload a file. It's also great to be able to share artifacts with the community.

An example Python script is also available on GitHub [8].

Figure 7: Example upload of a file to VirusTotal [9]
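Submitting only files that VirusTotal has not seen before is one of the improvements listed at the end of this diary. The sketch below is not the submit_vtfiles.py script [8]; it is a hypothetical shell version under several assumptions: downloaded files sit in one directory (cowrie's default downloads directory is assumed to be /srv/cowrie/var/lib/cowrie/downloads on the honeypot), a local text file of already-handled hashes (called a ledger here) avoids repeat lookups, and requests use the VirusTotal v3 endpoints `GET /api/v3/files/{hash}` and `POST /api/v3/files` with a key in `VT_API_KEY`. All function names are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: submit only files VirusTotal has not seen before.
# Assumes curl, GNU coreutils, and a VirusTotal API key in VT_API_KEY.

# Print the SHA-256 of a file (reading stdin avoids filename escaping).
file_sha256() {
  sha256sum < "$1" | cut -d' ' -f1
}

# Print regular files in DIR whose hash is not yet recorded in LEDGER.
list_unsubmitted() {
  local dir="$1" ledger="$2" f
  touch "$ledger"
  for f in "$dir"/*; do
    [ -f "$f" ] || continue
    grep -qxF "$(file_sha256 "$f")" "$ledger" || printf '%s\n' "$f"
  done
}

# Succeed if VirusTotal already has a report for HASH (HTTP 200; 404 = unknown).
vt_known() {
  local code
  code=$(curl --silent --output /dev/null --write-out '%{http_code}' \
    --header "x-apikey: ${VT_API_KEY}" \
    "https://www.virustotal.com/api/v3/files/$1")
  [ "$code" = "200" ]
}

# Upload FILE as multipart form data (this endpoint accepts files up to 32 MB).
vt_upload() {
  curl --silent --fail --output /dev/null \
    --header "x-apikey: ${VT_API_KEY}" \
    --form "file=@$1" \
    "https://www.virustotal.com/api/v3/files"
}

# For each new file: upload only if VirusTotal does not know the hash, then
# record the hash so the file is skipped on the next run.
submit_new_files() {
  local dir="$1" ledger="$2" f hash
  [ -n "${VT_API_KEY:-}" ] || { echo "VT_API_KEY is not set" >&2; return 1; }
  list_unsubmitted "$dir" "$ledger" | while IFS= read -r f; do
    hash=$(file_sha256 "$f")
    if vt_known "$hash" || vt_upload "$f"; then
      printf '%s\n' "$hash" >> "$ledger"
    fi
    sleep 30   # up to two requests per file; public API allows 4 per minute
  done
}
```

A crontab entry could then call something like `submit_new_files /srv/cowrie/var/lib/cowrie/downloads /logs/vt_submitted.txt` (both paths are assumptions). Checking the ledger first keeps the limited public API quota for files the honeypot has not handled yet.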

Capture PCAP data using tcpdump

We've already seen quite a bit of data that can be used to analyze activity on a DShield honeypot. Another artifact that's very easy to collect is network traffic captured directly on the honeypot. This can be set up through crontab by scheduling tcpdump to start at boot and to restart daily at midnight. Starting at boot is especially important since the honeypot reboots daily, and the tcpdump process needs to be restarted each time.

```
# crontab entries
# restart tcpdump daily at midnight
0 0 * * * sudo /dumps/stop_tcpdump.sh; sudo /dumps/grab_tcpdump.sh

# start tcpdump 60 seconds after boot
@reboot sleep 60 && sudo /dumps/grab_tcpdump.sh
```

```
#!/bin/bash
# /dumps/stop_tcpdump.sh contents
# Stop any running tcpdump. -x matches the process name exactly, so the .sh
# scripts are not matched; SIGTERM (unlike kill -9) lets tcpdump flush
# buffered packets to the pcap file before it exits.
pkill -TERM -x tcpdump
```

```
#!/bin/bash
# /dumps/grab_tcpdump.sh contents
# name each capture after its start time
TIMESTAMP=$(date "+%Y-%m-%d %H:%M:%S")
# full packets (-s 65535), excluding port 12222 (SSH access to the honeypot) and the remote access host
tcpdump -i eth0 -s 65535 -w "/dumps/$TIMESTAMP tcpdump.pcap" not port 12222 and not host <ip used for remote access/transfers>
```
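The stop script locates the capture by its process name, which would also stop any other tcpdump someone happened to be running on the honeypot. One alternative, sketched here with hypothetical helper names, is to record the capture's PID when it starts and signal exactly that process later. It sends SIGTERM, which lets tcpdump flush buffered packets to the pcap file before exiting.

```shell
#!/usr/bin/env bash
# Hypothetical alternative to searching the process list: record the PID of
# the capture when it starts and signal exactly that PID when stopping.

# Start a command in the background and write its PID to PIDFILE.
# Usage: start_with_pidfile PIDFILE command [args...]
start_with_pidfile() {
  local pidfile="$1"
  shift
  "$@" &
  echo "$!" > "$pidfile"
}

# Send SIGTERM to the recorded PID (if any), then remove the PID file.
stop_with_pidfile() {
  local pidfile="$1" pid
  [ -f "$pidfile" ] || return 0
  pid=$(cat "$pidfile")
  kill -TERM "$pid" 2>/dev/null
  rm -f "$pidfile"
}
```

For instance, grab_tcpdump.sh could pass its tcpdump command line to `start_with_pidfile /run/honeypot-tcpdump.pid`, and stop_tcpdump.sh could call `stop_with_pidfile /run/honeypot-tcpdump.pid` (the PID file path is an assumption). Keeping the PID file under /run, a tmpfs cleared at boot on most current distributions, prevents a stale PID from a previous boot from pointing at an unrelated process.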

A variety of things can be done with this network data and some of this I've explored in previous diaries [10].

Backup honeypot data

I often use backups of this data on a separate analysis machine. The backups are password protected since they contain malware and may be quarantined by antivirus products. This also protects against accidentally opening one of those files on a machine that wasn't intended for malware analysis. The configuration data can also help with setting up a new honeypot. In addition, a backup is a good opportunity to free up space on a honeypot. I personally like to back up my data before DShield honeypot updates or other changes.

```
# back up honeypot data, using "infected" for password protection
# (-r recurses into directories, -P encrypts with the given password)
zip -r -P infected /backups/home.zip /home
zip -r -P infected /backups/logs.zip /logs
zip -r -P infected /backups/srv.zip /srv
zip -r -P infected /backups/dshield_logs.zip /var/log/dshield*
zip -r -P infected /backups/crontabs.zip /var/spool/cron/crontabs
zip -P infected /backups/dshield_etc.zip /etc/dshield*
zip -r -P infected /backups/dumps.zip /dumps

# clear out PCAP files older than 14 days to save room on the honeypot
# 14 days is about 6 GB on my honeypot, roughly 425 MB/day
find /dumps -maxdepth 1 -name '*.pcap' -mtime +14 -delete
```
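Automating the backups is one of the improvements listed below. A minimal sketch, assuming the Info-ZIP `zip` utility, could write one dated archive per path and reuse the same age-based cleanup used for the PCAP files. The naming scheme, output directory, and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: dated, password-protected zip backups plus cleanup.
# -P uses legacy ZIP encryption: weak, meant only to keep antivirus tools and
# accidental double-clicks away from the malware samples, not for secrecy.

# Zip each PATH into OUTDIR/<path_with_underscores>-YYYY-MM-DD.zip.
# Usage: backup_paths OUTDIR PASSWORD PATH...
backup_paths() {
  local outdir="$1" password="$2" stamp path name
  shift 2
  stamp=$(date +%Y-%m-%d)
  mkdir -p "$outdir" || return 1
  for path in "$@"; do
    # e.g. /var/spool/cron/crontabs -> var_spool_cron_crontabs
    name=$(printf '%s' "${path#/}" | tr '/' '_')
    zip -r -q -P "$password" "${outdir}/${name}-${stamp}.zip" "$path" || return 1
  done
}

# Delete backup archives directly in OUTDIR that are older than DAYS days.
# Usage: prune_backups OUTDIR DAYS
prune_backups() {
  find "$1" -maxdepth 1 -name '*.zip' -mtime +"$2" -delete
}
```

Run from root's crontab, `backup_paths /backups infected /home /logs /srv /dumps` followed by `prune_backups /backups 30` would keep about a month of nightly archives (the 30-day window is an arbitrary example). Full copies of /srv and /dumps can be large, so these archives would likely need to be moved off the honeypot as well.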

The process of setting this up is relatively quick, but can be improved. Some future improvements I'd like to make:

- rotate web honeypot logs to keep one day of logs per file (similar to cowrie logs)

- automate backups and send them to Dropbox or another location

- update the VirusTotal upload script to use the newer API and also submit additional data such as download/upload URL, download/upload IP, attacker IP (if different)

- update the VirusTotal script to only submit when the hash has not been seen before on VirusTotal

- forward all logs (cowrie, web honeypot, firewall) to a SIEM (ELK stack most likely)

- automate deployment of all of these changes to simplify new honeypot setup

Please reach out with your ideas!

[1] https://github.com/jslagrew/cowrieprocessor

[2] https://isc.sans.edu/honeypot.html

[3] https://isc.sans.edu/myreports

[4] https://isc.sans.edu/myweblogs

[5] https://isc.sans.edu/mysshreports/

[6] https://isc.sans.edu/myrawsshreports.html?viewdate=2023-07-11

[7] https://man7.org/linux/man-pages/man5/crontab.5.html

[8] https://github.com/jslagrew/cowrieprocessor/blob/main/submit_vtfiles.py

[9] https://www.virustotal.com/gui/file/44591e0939a0f4894f66a3fb5d4e28fe02c934295d65fe8b280bf8a96d6a9ef5/community

[10] https://isc.sans.edu/diary/Network+Data+Collector+Placement+Makes+a+Difference/29664

--

Jesse La Grew

Handler
