Survival time for web sites

Many, many years ago we (SANS Internet Storm Center) published some interesting research about the survival time of new machines connected to the Internet. Back then, when Windows XP was the most popular operating system, it was enough to connect your new machine to the Internet for it to get compromised before you managed to download and install patches. Microsoft changed this with Windows XP SP2, which introduced a host-based firewall that was (finally) enabled by default, so a new user had a better chance of surviving the Internet.

We still collect and publish some information about survival time, and you can see that at https://isc.sans.edu/survivaltime.html.

Now, 20 years later, most of us do not have our workstations and laptops connected directly to the Internet; however, new web sites get installed and put on the Internet every second. I recently had to put several web sites up and was surprised at how fast certain scans happened, so I decided to do some tests on the survival time of new web sites.

Certificate transparency

Today, when you set up a new web site, one of the mandatory steps is to get an SSL/TLS certificate for it. We have come a long way from the initial certificates, which were very expensive, to today’s Let’s Encrypt certificates, which allow anyone to obtain a certificate that is trusted by any major browser, completely for free.

In order to make certificate issuance transparent and verifiable, the Certificate Transparency (CT) ecosystem was created by some of the biggest players in this field (Apple, Google, Facebook …).

Certificate Transparency allows monitoring of what certificates are issued by a Certificate Authority. This is done by publishing information about every single issued certificate into publicly available logs, which are append-only, built using Merkle trees, and tamper-evident.
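To illustrate why a Merkle tree makes such a log verifiable, here is a minimal sketch of the tree hash described in RFC 6962 (leaf hashes prefixed with 0x00, inner nodes with 0x01, SHA-256 throughout). The function names and the placeholder entries are my own; real log entries are structured records rather than short byte strings, and this is not how any particular log is implemented.

```python
import hashlib
from typing import Sequence


def leaf_hash(entry: bytes) -> bytes:
    # Leaves are hashed with a 0x00 prefix so they can never be
    # confused with inner nodes.
    return hashlib.sha256(b"\x00" + entry).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    # Inner nodes are hashed with a 0x01 prefix.
    return hashlib.sha256(b"\x01" + left + right).digest()


def merkle_root(entries: Sequence[bytes]) -> bytes:
    """Root hash over an ordered list of log entries."""
    n = len(entries)
    if n == 0:
        return hashlib.sha256(b"").digest()
    if n == 1:
        return leaf_hash(entries[0])
    # Split at the largest power of two strictly smaller than n.
    k = 1
    while k * 2 < n:
        k *= 2
    return node_hash(merkle_root(entries[:k]), merkle_root(entries[k:]))


if __name__ == "__main__":
    # Placeholder entries, for illustration only.
    log = [b"cert-a", b"cert-b", b"cert-c"]
    print("root:          ", merkle_root(log).hex())
    # Appending an entry yields a new root ...
    print("after append:  ", merkle_root(log + [b"cert-d"]).hex())
    # ... and so does silently changing an old entry.
    print("after tamper:  ", merkle_root([b"cert-a", b"cert-X", b"cert-c"]).hex())
```

Because every entry feeds into the root hash, anyone who remembers an earlier root can detect a log that rewrote or dropped an entry.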

The idea is great, and is today widely accepted by all major browsers: they actually require that a certificate has been added to a Certificate Transparency (CT) log before accepting it. This is generally done by adding a Signed Certificate Timestamp (SCT) extension to the certificate.

Now, since the whole database is public (remember, transparency above), we can even query it. The good folks at Cali Dog even created a web page and a CLI tool that allow us to query the database in real time (https://certstream.calidog.io/), while you can always see historical data at Censys (https://search.censys.io/) or CRT.sh (https://crt.sh/).

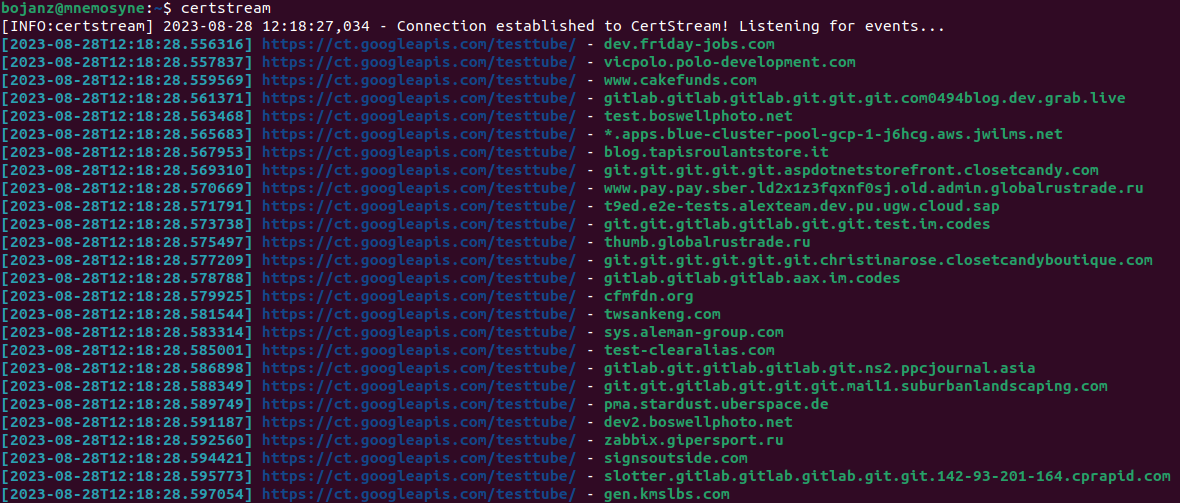

For our survival time, it is the real-time feed that is interesting. Once you install the client tool for certstream, you can just start it and see zillions of new domains flow in, as shown below:

Survival time

This is what prompted me to check the survival time of web sites – basically, as soon as a new certificate has been issued by a CA participating in Certificate Transparency (and that’s virtually any trusted CA today), information about the issued certificate is public!

I did a little test: I started certstream, filtered its output for my domains only, and then issued a request for the site1.isc.mydomain.com domain to Let’s Encrypt. It took a couple of seconds for the request to result in a new certificate, and almost instantly I saw the record appear in certstream:

[2023-08-28T11:34:33.988190] https://oak.ct.letsencrypt.org/2023/ - site1.isc.mydomain.com

This was all sub-second, and is OK and expected. However, what I did not expect was to see the following logs appear on my server, again almost instantly:

134.122.89.242 - - [28/Aug/2023:09:34:34 +0000] "GET / HTTP/1.1" 302 5685 "-" "-"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET / HTTP/1.1" 302 5685 "-" "Mozilla/5.0 (Linux; Android 6.0; HTC One M9 Build/MRA535528) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2892.98 Mobile Safari/537.3"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /.vscode/sftp.json HTTP/1.1" 302 5685 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /about HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /debug/default/view?panel=config HTTP/1.1" 302 1102 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /v2/_catalog HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /ecp/Current/exporttool/microsoft.exchange.ediscovery.exporttool.application HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /server-status HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /login.action HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /.DS_Store HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /.env HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /.git/config HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /s/632313e2733313e2430313e2237313/_/;/META-INF/maven/com.atlassian.jira/jira-webapp-dist/pom.properties HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /config.json HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /telescope/requests HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"

134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /?rest_route=/wp/v2/users/ HTTP/1.1" 302 1126 "-" "Go-http-client/1.1"

My brand new site was scanned almost instantly by a scanner running at Digital Ocean. This was only the first scan, which happened to be done by https://leakix.org; soon I observed dozens of other requests.

Since the scanner knew exactly what virtual site to send a request to, and the request was sent almost immediately after I got the certificate, it is clear that attackers are also monitoring the Certificate Transparency database and that they are scanning new sites as soon as they appear online.

If we look at the requests above, we can see that they are trying to pick up some low-hanging fruit. This makes sense: they want to catch misconfigured web sites before an administrator has had a chance to configure them (unless the administrator did this before issuing the certificate, as they should).
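To put a number on "almost instantly" for your own site, the delay between certificate issuance and the first request can be computed from the access log. The following is a rough sketch: the regular expression assumes the combined log format seen above, the function names are mine, and the issuance time in the usage example assumes that the certstream client printed local time at UTC+2 (the log line itself carries no time zone, so treat that as a guess).

```python
import re
from datetime import datetime, timedelta, timezone

# Matches the combined log format used in the log lines above.
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict for one access log line, or None if it does not match."""
    m = LOG_RE.match(line.strip())
    if m is None:
        return None
    rec = m.groupdict()
    # Example timestamp: 28/Aug/2023:09:34:34 +0000
    rec["time"] = datetime.strptime(rec["ts"], "%d/%b/%Y:%H:%M:%S %z")
    return rec


def first_request_delay(issued_at, log_lines):
    """Seconds between certificate issuance and the earliest logged request.

    issued_at must be a timezone-aware datetime. Returns None if no line
    could be parsed. The log only has one-second resolution, so the result
    is accurate to about a second at best.
    """
    times = [rec["time"] for rec in map(parse_line, log_lines) if rec]
    if not times:
        return None
    return (min(times) - issued_at).total_seconds()


if __name__ == "__main__":
    lines = [
        '134.122.89.242 - - [28/Aug/2023:09:34:34 +0000] "GET / HTTP/1.1" 302 5685 "-" "-"',
        '134.122.89.242 - - [28/Aug/2023:09:34:35 +0000] "GET /.env HTTP/1.1" 302 1076 "-" "Go-http-client/1.1"',
    ]
    # ASSUMPTION: the certstream timestamp was local time at UTC+2.
    issued = datetime(2023, 8, 28, 11, 34, 33, 988190,
                      tzinfo=timezone(timedelta(hours=2)))
    print("delay in seconds:", first_request_delay(issued, lines))
    print("paths probed:", [parse_line(l)["path"] for l in lines])
```

Feeding it a whole access log also gives a quick list of which paths were probed first, which is a handy checklist of things that should not be reachable on a fresh install.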

Increasing visibility

It is clear that attackers will abuse anything they get their hands on. Twenty years ago, survival time for a new Windows XP machine was getting close to 10 seconds; today, for web sites, it is even less than that. Of course, if an administrator properly configures and hardens their web site, all will be good, but we can expect to see some scanning almost as soon as we are on the Internet.

Why not use this to our advantage as well? One of the recommendations I always give organizations to protect themselves is to monitor whether any certificates are issued with your own organization’s name in them!

For example, we could run certstream and just grep for our organization’s name (provided it is unique enough). We might detect certificates we were not aware of: both those issued for legitimate purposes and those requested by attackers, perhaps for phishing campaigns.
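If you would rather do the filtering in code than with grep, something along these lines should work with the certstream Python library. This is a sketch under assumptions: the watchlist entry is just the example domain used earlier, the message layout (message_type, data.leaf_cert.all_domains, data.source.url) is what the library documents at the time of writing, and the public certstream server may not always be reachable.

```python
# Keywords to watch for; replace with your own organization or domain names.
WATCHLIST = ["mydomain.com"]


def matching_domains(message, keywords):
    """Return the domains in a certstream message that contain any keyword."""
    if message.get("message_type") != "certificate_update":
        return []  # e.g. heartbeat messages carry no certificate
    domains = (
        message.get("data", {}).get("leaf_cert", {}).get("all_domains", [])
    )
    lowered = [k.lower() for k in keywords]
    return [d for d in domains if any(k in d.lower() for k in lowered)]


def on_message(message, context):
    """Callback invoked by the certstream library for every message."""
    source = message.get("data", {}).get("source", {}).get("url", "?")
    for domain in matching_domains(message, WATCHLIST):
        print(f"ALERT: certificate logged for {domain} (log: {source})")


def main():
    # Third-party dependency (pip install certstream), imported here so the
    # filtering logic above can be used and tested without it.
    import certstream

    certstream.listen_for_events(on_message, url="wss://certstream.calidog.io/")
```

Call main() to start listening. Plain substring matching is noisy for short or generic names, so expect to tune the watchlist, and consider sending alerts somewhere more useful than standard output.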

So, let’s use this data as much as we can. Let us know if you have any other good use cases for Certificate Transparency data!
